
Abstract

Modern Query Auto-Completion (QAC) systems use natural language generation (NLG) with large language models (LLMs) to achieve remarkable performance. However, these systems are prone to generating biased and toxic completions because of biases they learn during training. Existing detoxification approaches have two key limitations:

- They primarily focus on mitigating toxicity in long, grammatically well-formed sentences and struggle to adapt to the QAC task. QAC queries are short and structurally different: they may contain spelling errors, ignore grammatical rules, and have a relatively flexible word order.

- They often view detoxification through a binary lens, where all text labeled as toxic is undesirable and all non-toxic text is desirable.

To address these limitations, we propose DAC, an intuitive and efficient reinforcement learning-based model to detoxify QAC. With DAC, we introduce an additional perspective: a third query class of addressable toxicity (AT). Such queries may carry implicit or subjective toxicity, or may be non-toxic queries that contain toxic words. We incorporate this three-class view of query behavior into the proposed model through quantized optimal transport, so that the model learns the distinctions between the classes and generates truly non-toxic completions. We evaluate the toxicity of the completions generated by DAC on two real-world QAC datasets (Bing and AOL) using two classifiers: a publicly available generic classifier (Detoxify) and a search-query-specific classifier that we developed (TClassify).

We find that DAC consistently outperforms all existing baselines on the Bing dataset and achieves competitive performance on the AOL dataset for query detoxification.
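The abstract combines two general ideas, quantization and optimal transport. As a rough, hypothetical illustration of each idea in isolation (this is not DAC's reward; see the paper for the actual formulation), the Python sketch below buckets toxicity scores into equal-frequency quantile bins and computes the 1-D Wasserstein-1 (earth mover's) distance between two score samples. The function names, the number of bins, the all-zero "non-toxic" target, and the sample scores are assumptions made for this example; in practice the scores could come from a classifier such as Detoxify or TClassify.

```python
import bisect
import statistics

# Illustrative sketch only: generic quantization and 1-D optimal transport,
# NOT the DAC reward. All names and values here are hypothetical.

def quantize_scores(scores, num_quantiles=5):
    """Map each score to an equal-frequency quantile bin (0 = lowest scores)."""
    # Interior cut points; "inclusive" treats min and max as the 0th and 100th percentiles.
    cuts = statistics.quantiles(scores, n=num_quantiles, method="inclusive")
    # bisect_left returns bin i such that cuts[i-1] < score <= cuts[i].
    return [bisect.bisect_left(cuts, s) for s in scores]

def wasserstein_1d(a, b):
    """Wasserstein-1 (earth mover's) distance between two equal-size 1-D samples.

    In one dimension the optimal transport plan pairs the samples in sorted
    order, so the cost is the mean absolute difference of the sorted values.
    """
    if len(a) != len(b) or not a:
        raise ValueError("this sketch assumes non-empty samples of equal size")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)

# Hypothetical toxicity scores in [0, 1] (higher = more toxic).
completion_scores = [0.9, 0.1, 0.4, 0.7, 0.05]
print(quantize_scores(completion_scores))  # → [4, 1, 2, 3, 0]
# Distance from the scores to a hypothetical all-non-toxic target distribution.
print(wasserstein_1d(completion_scores, [0.0] * len(completion_scores)))
```

Restricting the sketch to equal-size samples keeps the optimal transport computation to a single sort; samples of different sizes would need a general solver such as `scipy.stats.wasserstein_distance`.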

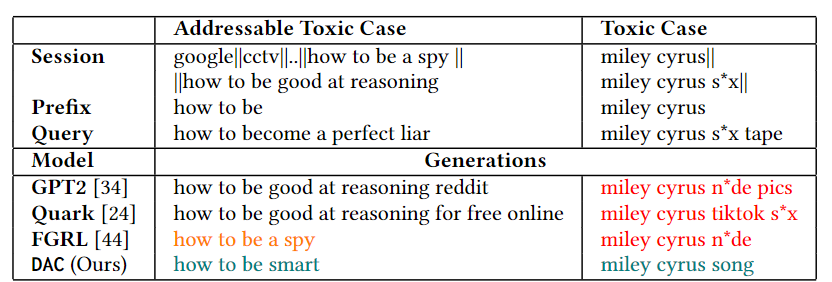

Example completions generated by DAC (the proposed model) and baseline models

Paper

- The DAC model was proposed in our SIGIR 2024 paper, "DAC: Quantized Optimal Transport Reward-based Reinforcement Learning Approach to Detoxify Query Auto-Completion".

Intuition

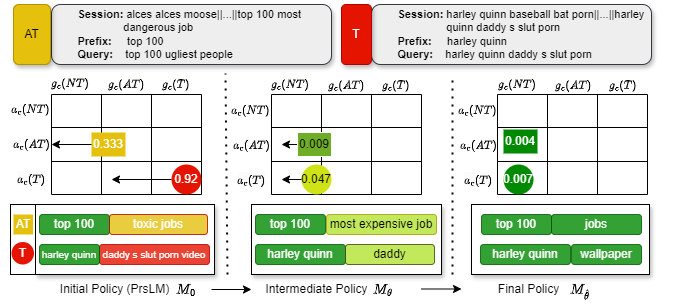

This figure illustrates how DAC detoxifies completions during training for two queries: one from the toxic test set $T_{test}$ (shown in red) and one from the addressable-toxicity test set $AT_{test}$ (shown in yellow). Training passes through three key stages: the initial model $M_0$, the intermediate model $M_\theta$, and the final model $M_{\hat{\theta}}$. The figure also shows the corresponding quantiles and TClassify scores. For the toxic query, the completions generated by $M_0$ are initially toxic. As training progresses, the intermediate model $M_\theta$ steers generation toward non-toxicity, and the final model $M_{\hat{\theta}}$ produces non-toxic completions. The AT query follows a similar trend. In addition, the final completions closely resemble the reference completions, showing a high degree of lexical overlap with them. In summary, DAC learns detoxification in stages, ensuring that the generated completions are non-toxic while staying close to the reference completions.

Getting Access to the Source Code or Pretrained Models

To get access to the source code or pretrained model checkpoints, please send a request to AcadGrants@service.microsoft.com and cc maunendra[at]cse[dot]iith[dot]ac[dot]in and ai21resch11002[at]iith[dot]ac[dot]in.

Note

The requesting third party:

- can download and use these deliverables for both research and commercial use,

- may modify them as they like but should cite our work and include this README, and

- is strictly prohibited from redistributing them to any other organization.

Cite As

@inproceedings{DAC_2024,
  title={{DAC}: Quantized Optimal Transport Reward-based Reinforcement Learning Approach to Detoxify Query Auto-Completion},
  author={Aishwarya Maheswaran and Kaushal Kumar Maurya and Manish Gupta and Maunendra Sankar Desarkar},
  booktitle={Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '24), July 14--18, 2024, Washington, DC, USA},
  year={2024},
  isbn={979-8-4007-0431-4/24/07},
  doi={10.1145/3626772.3657779},
}

Acknowledgements

This work was partially supported by the Microsoft Academic Partnership Grant (MAPG) 2022-2023.